I am currently a tenure-track assistant professor at the School of Artificial Intelligence, Shanghai Jiao Tong University, where I lead the EPIC (Efficient and Precision Intelligent Computing) laboratory and am qualified to supervise master's and doctoral students. I obtained my Ph.D. in June 2024 from the Institute for Interdisciplinary Information Sciences at Tsinghua University, under the supervision of Associate Professor Kaisheng Ma. During my doctoral studies, I received the Microsoft Fellowship (one of twelve awarded in the Asia-Pacific region annually), the Beijing Outstanding Graduate award, the Tsinghua University Outstanding Doctoral Thesis award, the Tsinghua University Qihang Gold Medal, and the Tsinghua University Jiang Nanxiang Scholarship. During my Ph.D., I published over twenty high-quality academic papers, thirteen of them as first author, and my papers have been cited more than 2,200 times in total (as of November 2024). My research results have been applied at organizations such as Polar Bear Technology, Huawei, and Core Technology Research Institute of Interdisciplinary Information. Since 2020,

Our laboratory is recruiting undergraduate or graduate research assistants and students for the class of 2026. If you are interested, please check our recruitment post.

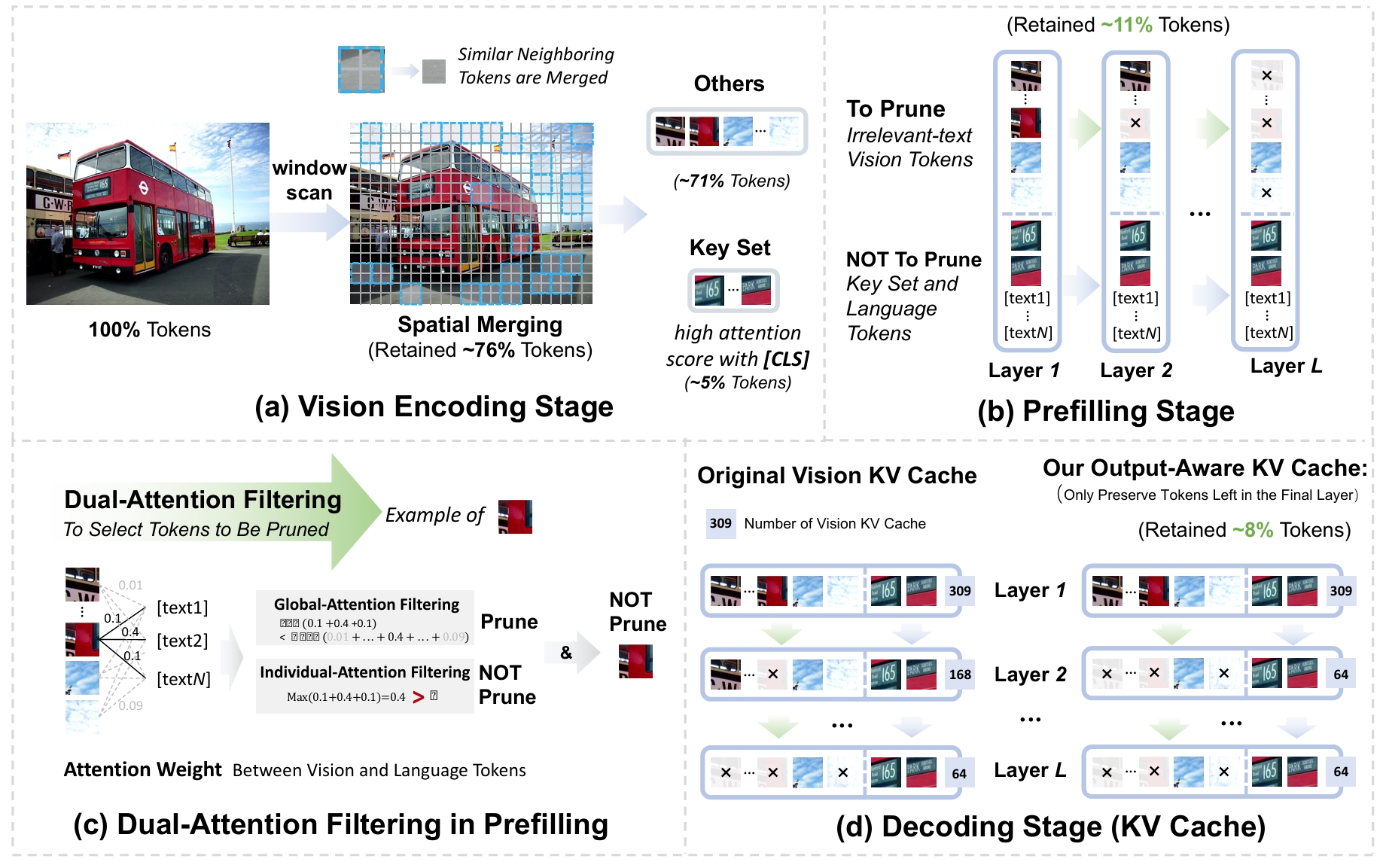

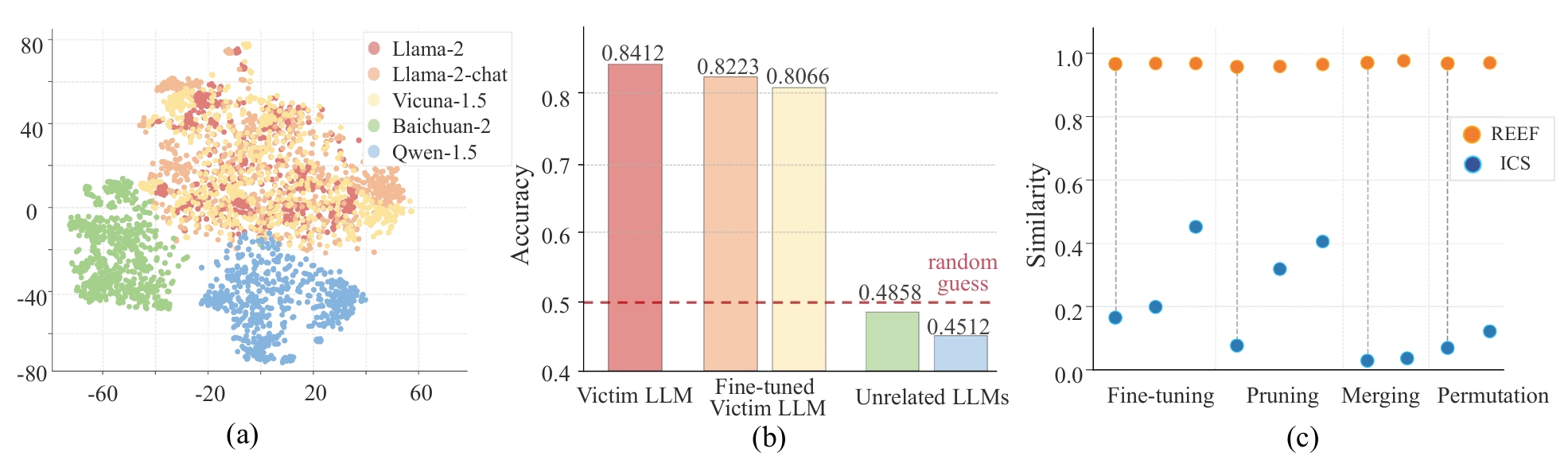

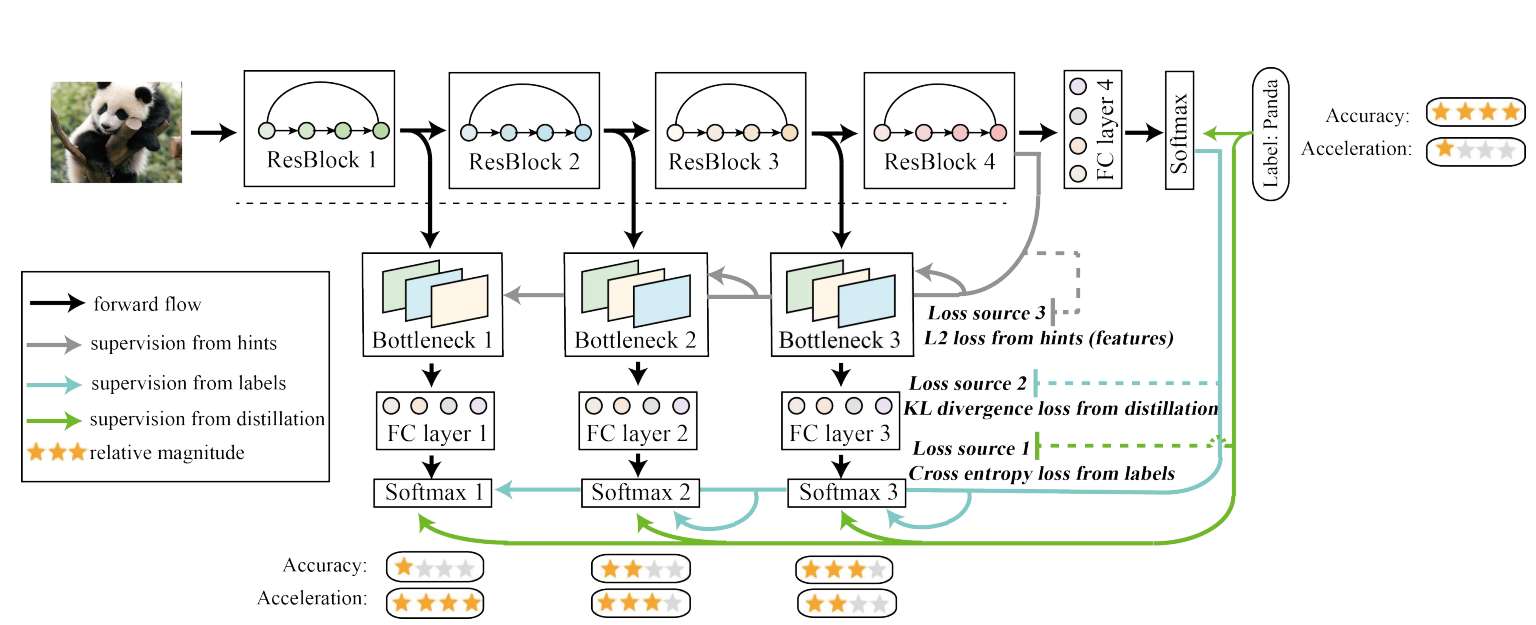

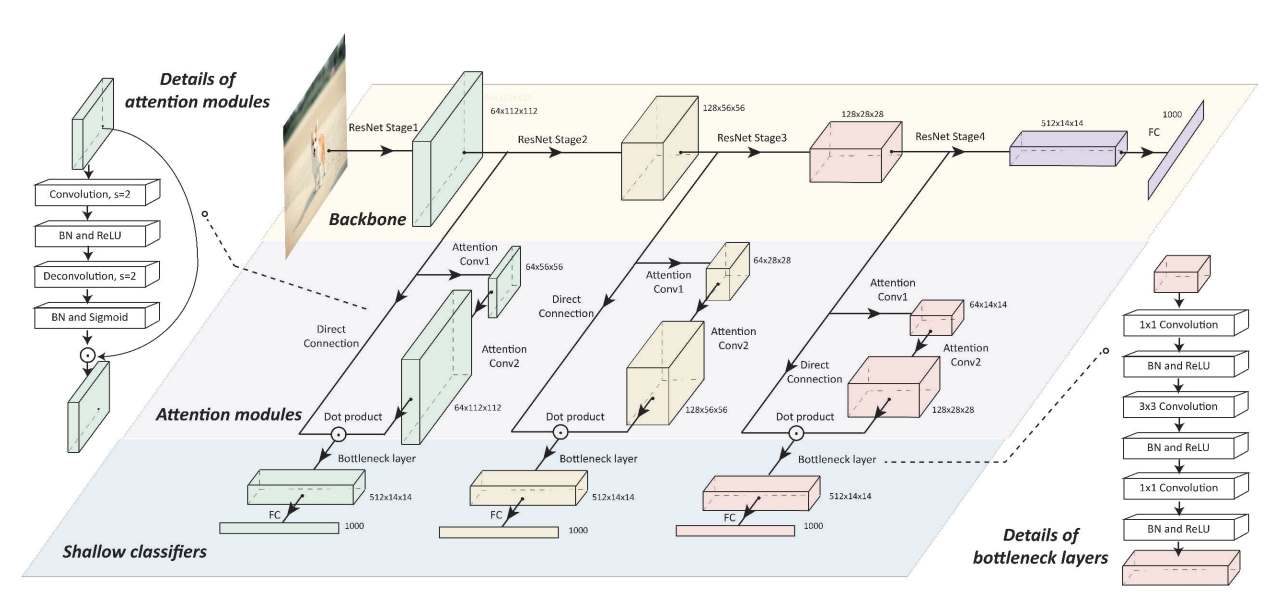

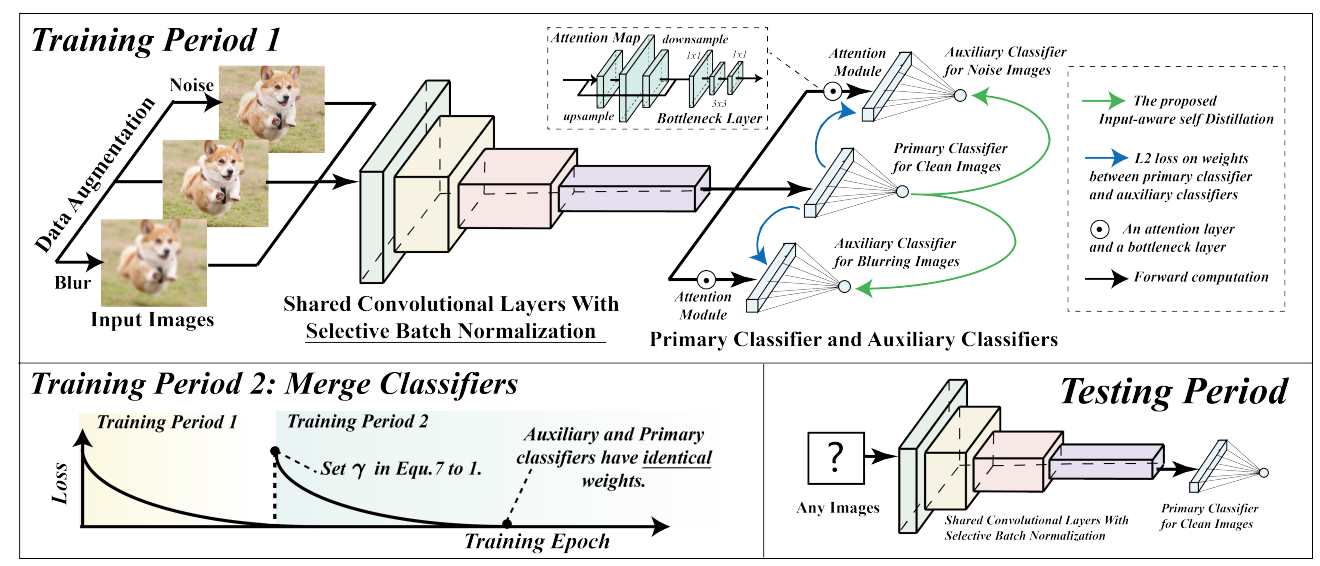

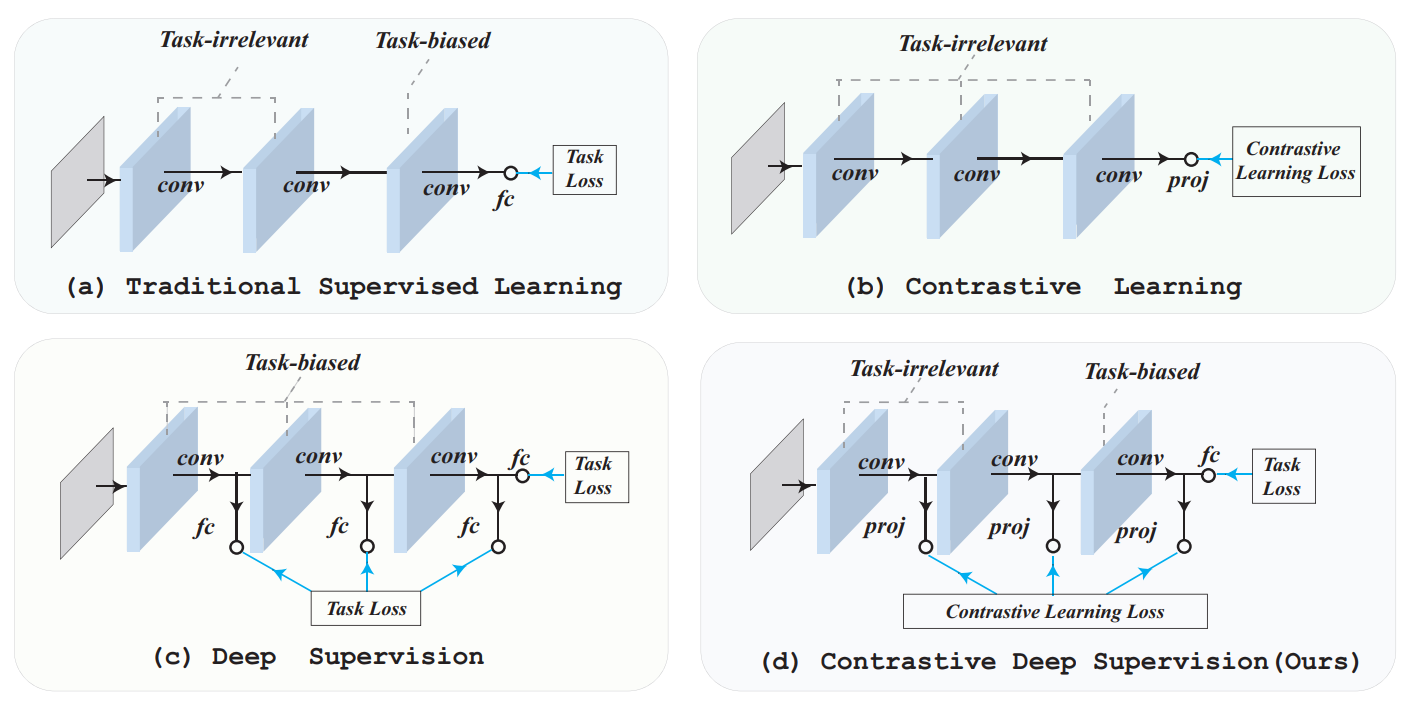

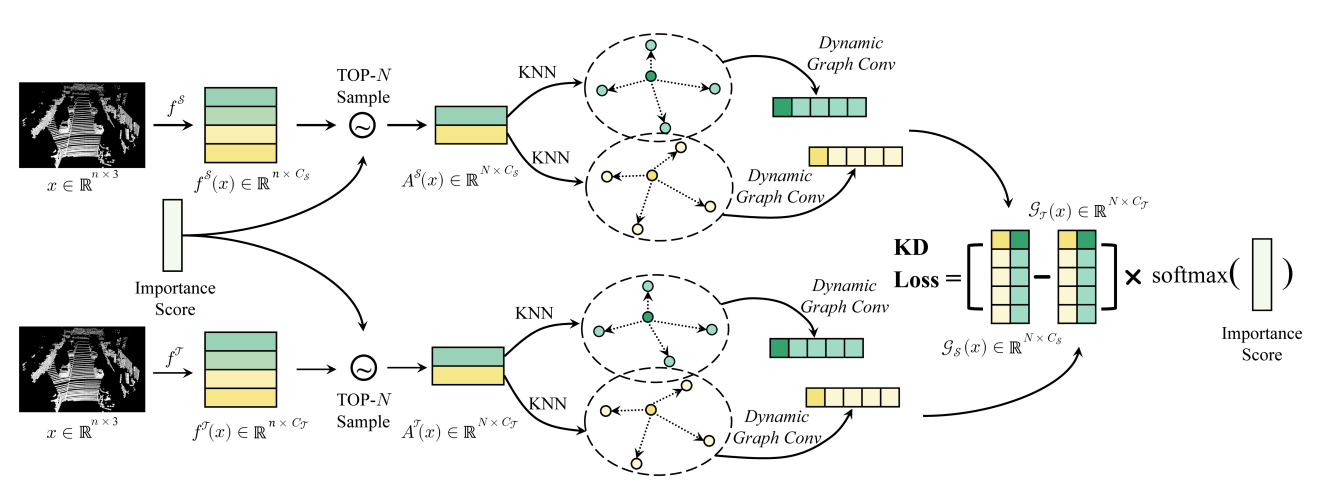

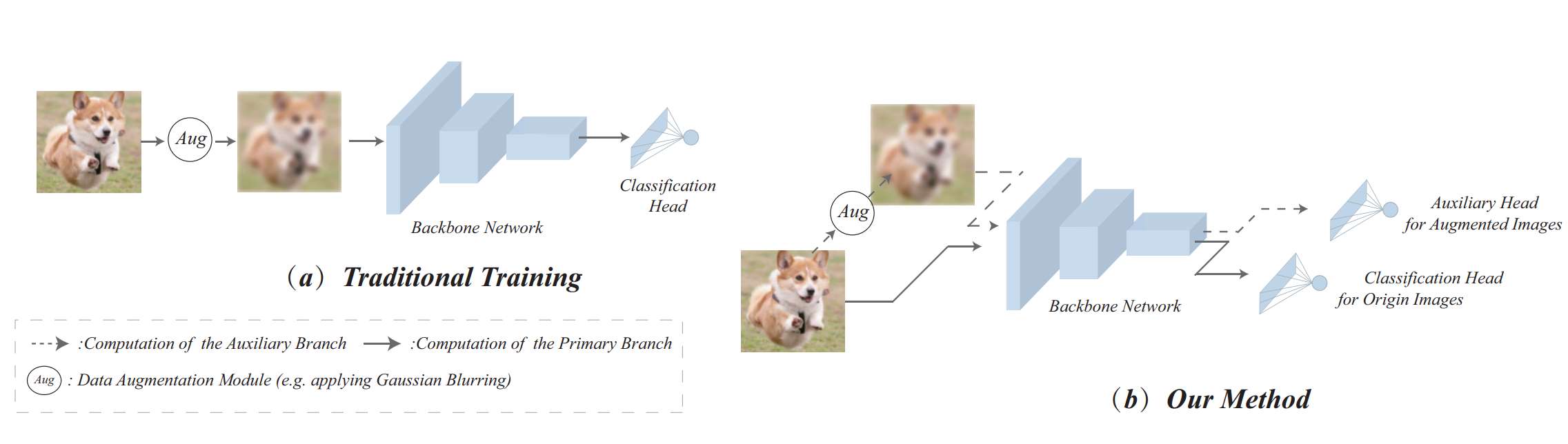

I. Lightweight and Efficient Large Models for Language/Multimodality: Current generative large models have billions of parameters, which makes training and inference extremely expensive. For example, OpenAI at one point restricted users from subscribing to the paid GPT-4 tier of ChatGPT because it could not sustain the computational cost. By researching compression and acceleration methods for generative large models, we can reduce their deployment costs and make them more usable in the real world. At the same time, how to give small models the same representational capabilities as large models is one of the fundamental questions in artificial intelligence.

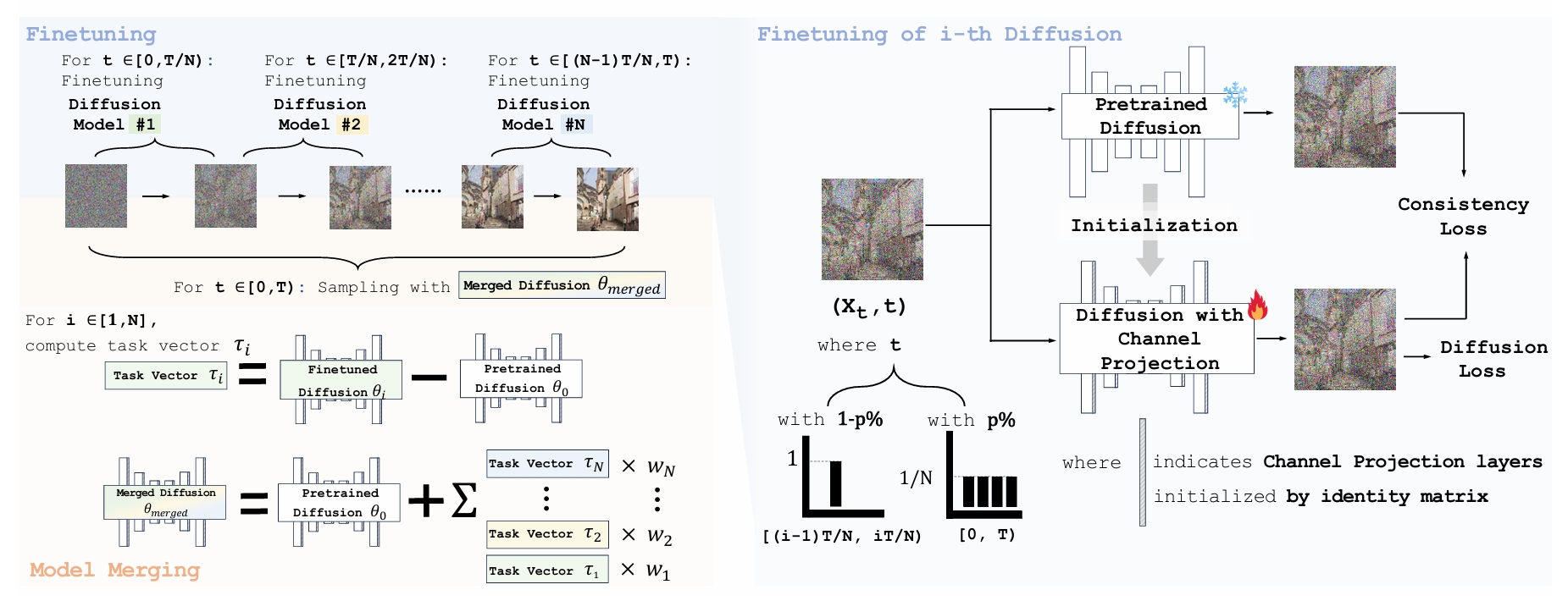

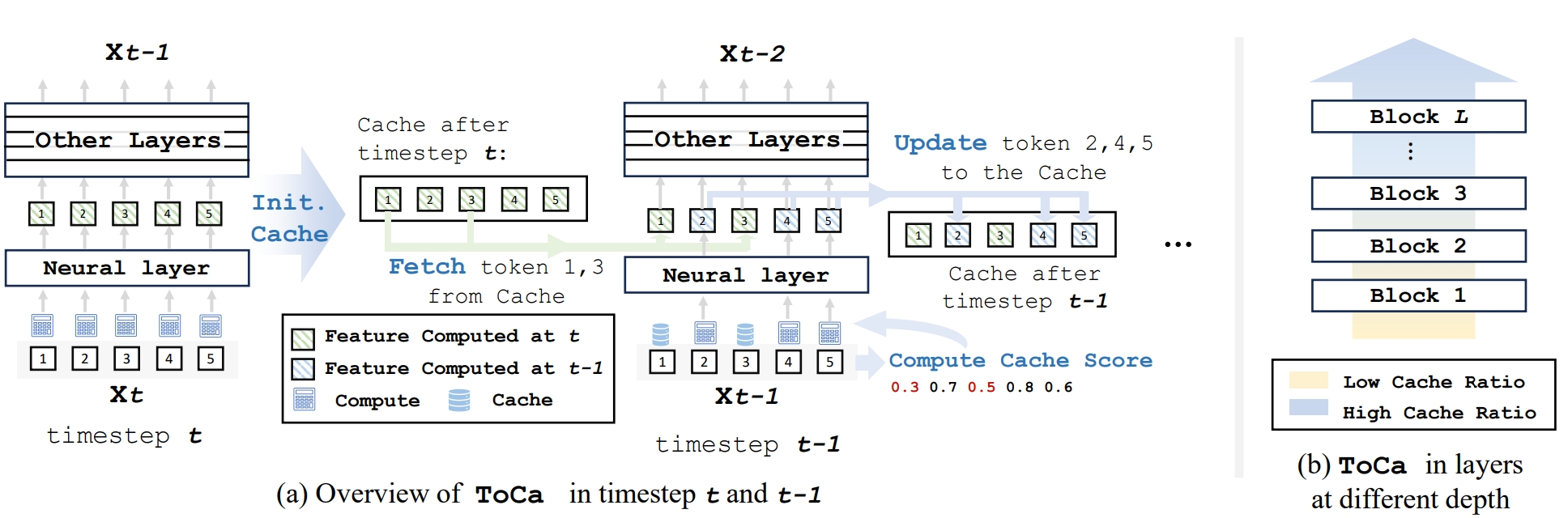

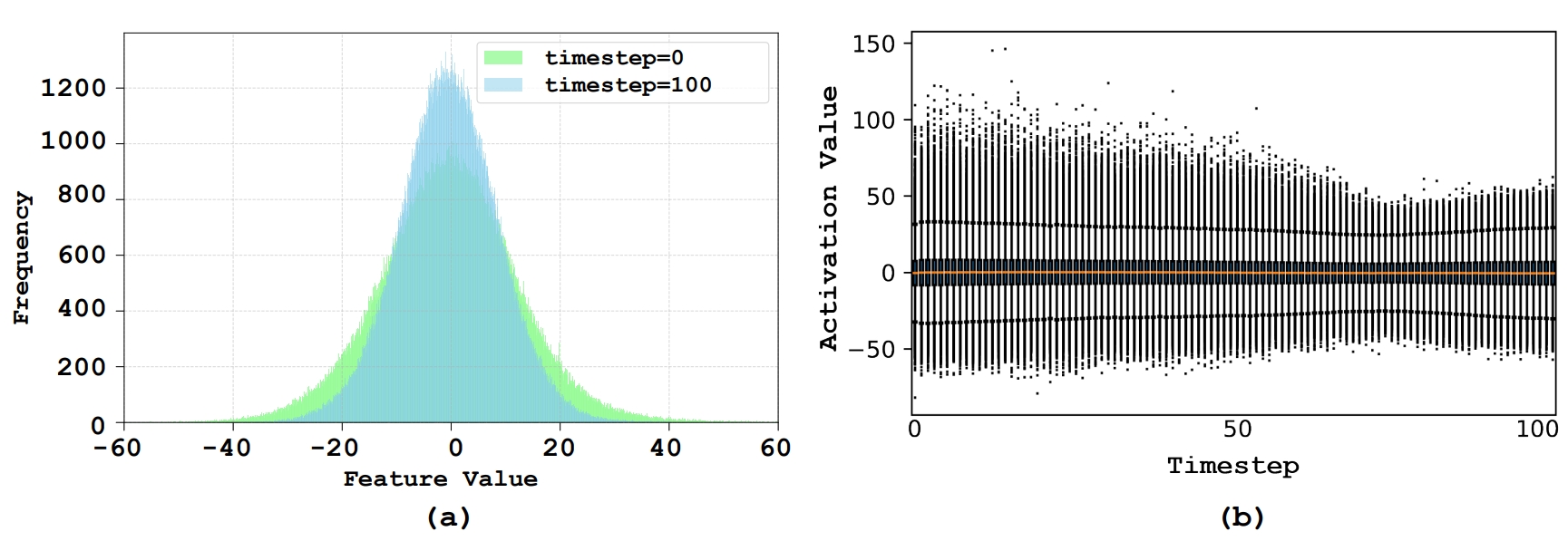

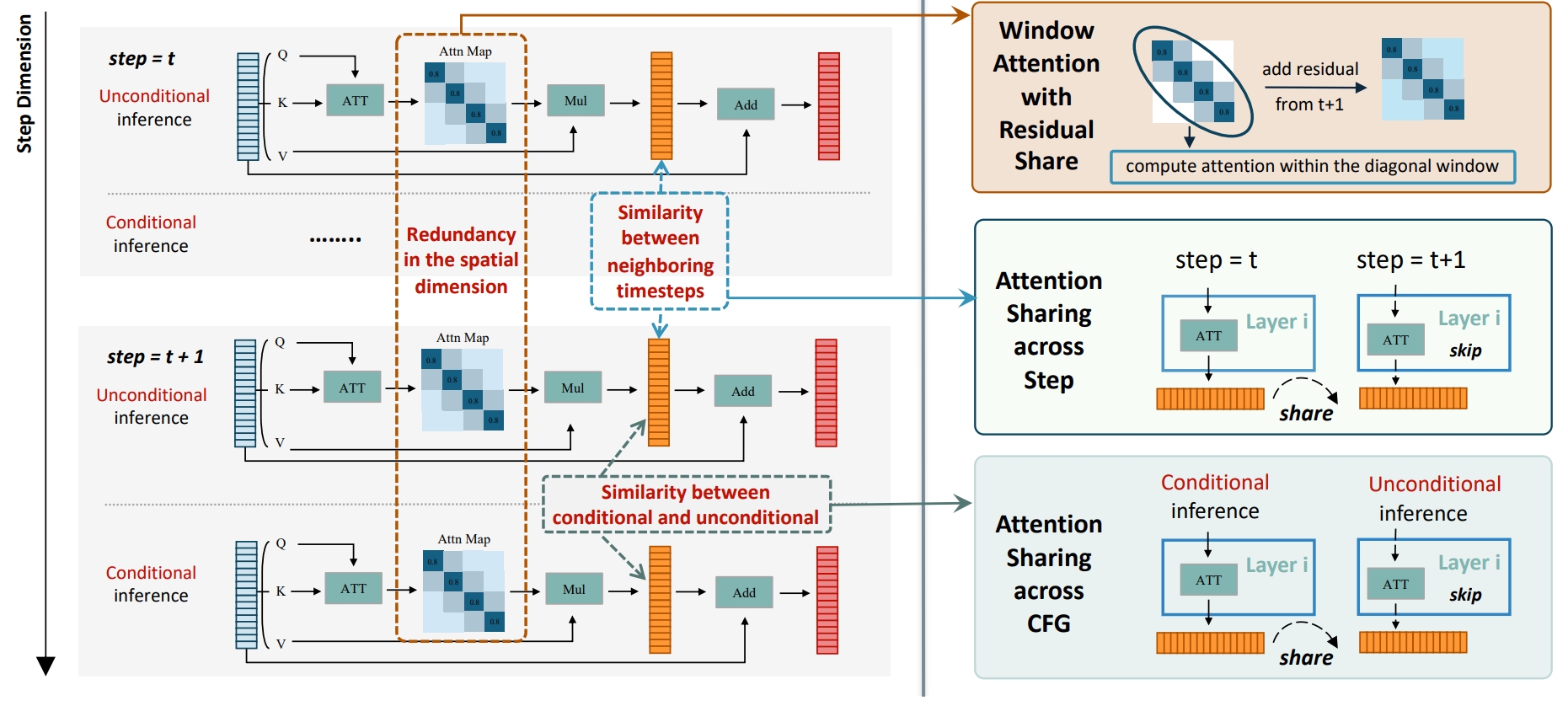

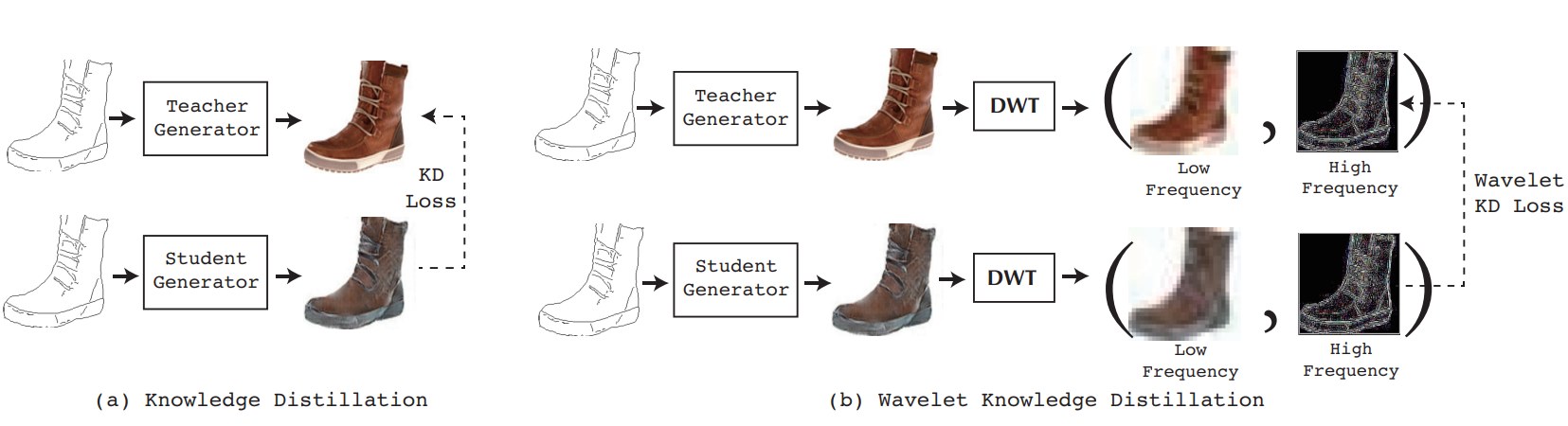

II. Lightweight and Efficient AIGC Models: Text-to-image and text-to-video models, represented by Stable Diffusion and Sora, have sparked a wave of AIGC (Artificial Intelligence Generated Content). However, the computational cost of generating high-resolution images and long videos is often extremely high, which makes these models difficult to deploy in industrial applications. To address this issue, we are committed to building efficient visual generation models that promote the industrialization of AIGC.

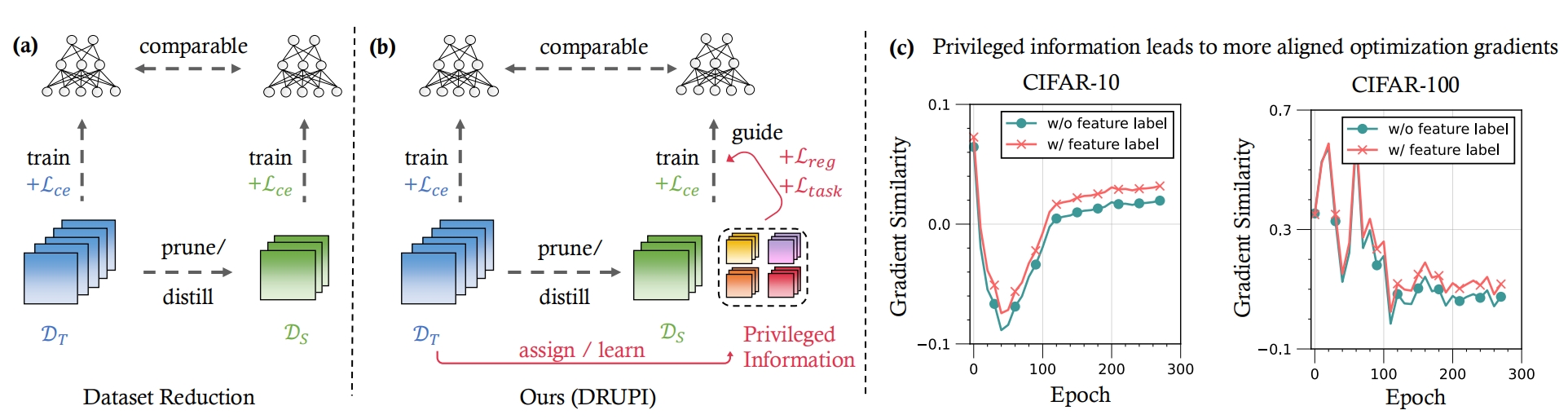

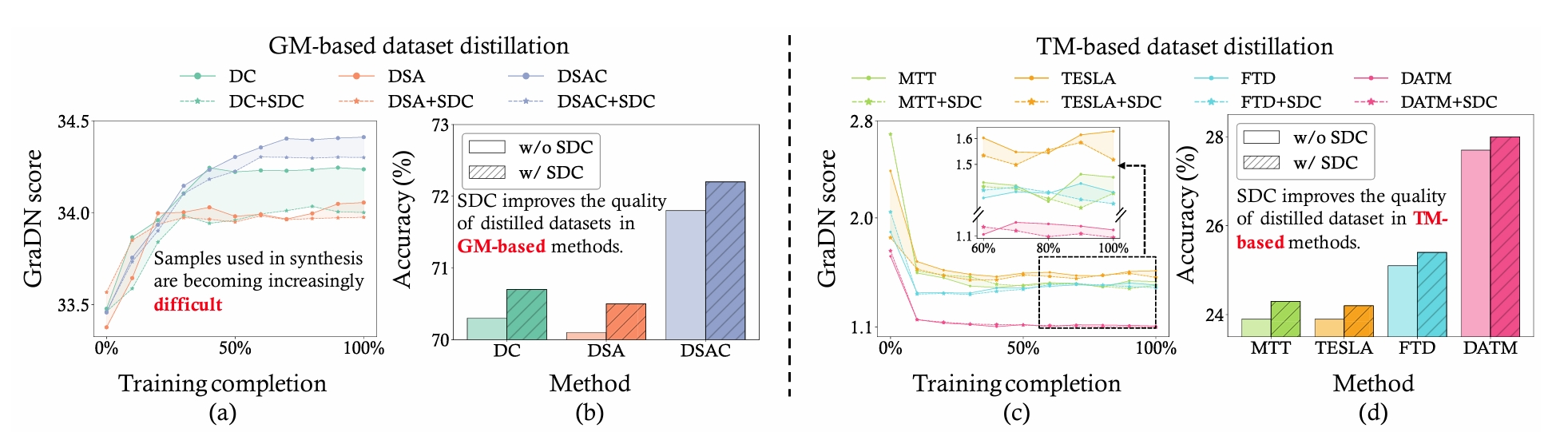

III. Data-Efficient Artificial Intelligence: Current artificial intelligence models require training on a vast amount of data, which significantly increases the training costs of large models. We study how to utilize data more efficiently, clean and synthesize data more scientifically, and use synthetic data to further enhance generative models, leading to data-efficient artificial intelligence.

Reviewer for conferences and journals including NeurIPS, ICML, ICLR, CVPR, ECCV, ICCV, AAAI, IJCAI, AISTATS, IEEE TPAMI, IEEE TCSVT, IEEE TIP, IEEE TMI, PR, TACO, Scientific Reports, and others.

Area Chair and Guest Editor for conferences and journals including NeurIPS 2025, IJCNN 2025, ACL 2025, and Big Data and Cognitive Computing.

December 2024, Huawei Inc., Shanghai, Talk Title: Data-Centric Model Compression.

December 2024, Northeastern University, Shenyang, Talk Title: Training-Free Inference Acceleration for Diffusion Models.

December 2024, Huawei Intelligent Car BU AI Youth Forum, Shanghai, Talk Title: Generative Model Compression from the Perspective of Tokens.

December 2024, Shanghai University of Finance and Economics, Shanghai, Talk Title: Generative Model Compression from the Perspective of Tokens.

November 2024, China Agricultural University, Shanghai, Talk Title: Accelerating Inference of AIGC Models Based on Diffusion Models.

April 2024, Huawei Computing Product Line Youth Forum, Hangzhou, Talk Title: Model Compression Based on Knowledge Distillation.

Linfeng Zhang received his Bachelor's degree from Northeastern University and his Ph.D. degree from Tsinghua University. He currently leads the Efficient and Precision Intelligent Computing (EPIC) Lab at Shanghai Jiao Tong University.

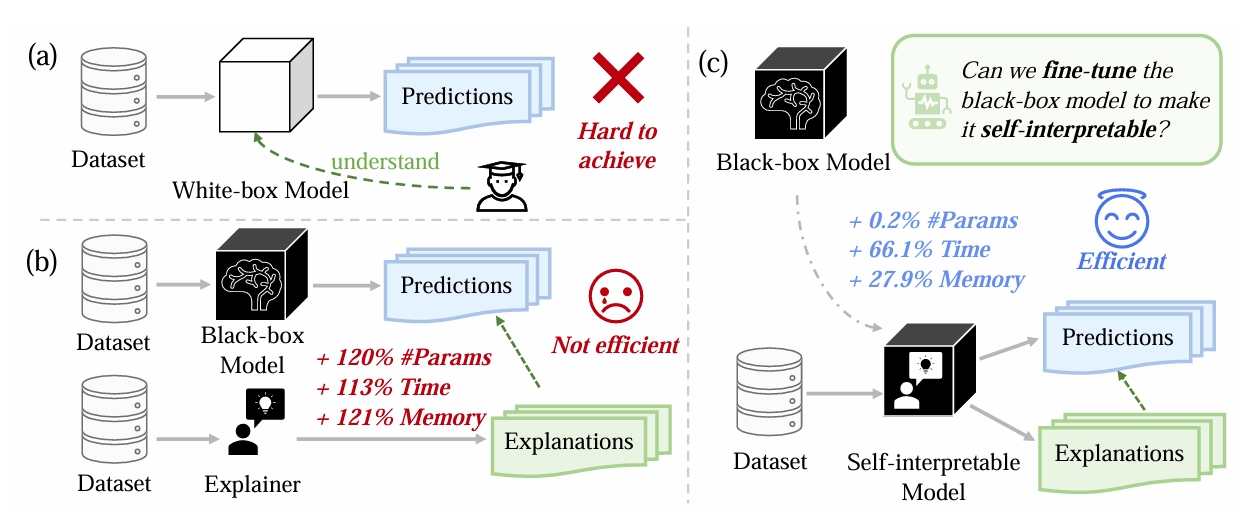

Shaobo Wang is a Ph.D. candidate in the EPIC Lab at SAI, Shanghai Jiao Tong University, starting in 2024. Building on a strong background in efficient AI, explainable AI, and deep learning theory, he focuses his research on data synthesis and data reduction. He is particularly interested in foundation models, striving to understand their intrinsic behavior while making them more data efficient, lightweight, and cost effective in both training and inference.

Yifeng Gao is a master's student in the EPIC Lab, Shanghai Jiao Tong University. His research interests focus on developing capable, reliable, and efficient AI through algorithm and computing co-design. Currently, he focuses on efficient inference for multi-step reasoning in large language models, as well as their trustworthiness.

Zichen Wen is a Ph.D. student in the EPIC Lab at Shanghai Jiao Tong University, under the supervision of Prof. Linfeng Zhang. He holds a B.S. degree in Computer Science from the University of Electronic Science and Technology of China. During his undergraduate studies, he published multiple research papers at prestigious AI conferences, including AAAI and ACM MM. His research interests lie in Efficient Multi-Modal Large Models and Trustworthy AI, focusing on advancing the efficiency, reliability, and ethical aspects of artificial intelligence systems.

Xuelin Li will begin pursuing a Ph.D. degree at the EPIC Lab in 2025. He is expected to graduate with a Bachelor's degree from the University of Electronic Science and Technology of China (UESTC), where he achieved a perfect 4.0/4.0 GPA across all courses in the School of Software. During his undergraduate studies, he received numerous awards, including the National Scholarship. His research interests focus on developing efficient inference paradigms for trustworthy multimodal large language models.

Zexuan Yan is currently a senior student majoring in Computer Science and Technology at the University of Science and Technology of China, and will join Linfeng Zhang's EPIC Lab at the School of Artificial Intelligence, Shanghai Jiao Tong University in the fall of 2025. His research interests include multimodal models, AIGC, and diffusion model acceleration.

Chang Zou is currently an undergraduate student at Yingcai Honors College, University of Electronic Science and Technology of China (UESTC), expected to complete his bachelor's degree in 2026. Originally from Chengdu, Sichuan, he doesn’t eat spicy food despite his hometown’s reputation. His primary research focus is on the efficient acceleration of AIGC, particularly Diffusion Models, and he has a solid background in mathematics and physics. In 2024, he began his internship at the EPIC Lab, where, under the guidance of his advisor, Linfeng Zhang, he contributed to submissions for ICLR and CVPR.

Xuyang Liu is currently pursuing his M.S. degree at the College of Electronics and Information Engineering, Sichuan University. He is also a research intern at Taobao & Tmall Group, where he focuses on efficient multi-modal large language models. In 2024, he joined the EPIC Lab as a research intern under the guidance of Prof. Linfeng Zhang, contributing to the development of a comprehensive collection of resources on token-level model compression. His research interests include Efficient AI, covering areas such as discrimination, adaptation, reconstruction, and generation.