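Linfeng Zhang is an Assistant Professor at the School of Artificial Intelligence, Shanghai Jiao Tong University. He received his PhD from the Institute for Interdisciplinary Information Sciences (IIIS), Tsinghua University. His honors include the World Artificial Intelligence Conference (WAIC) Yunfan Award (20 recipients), the Microsoft Research Asia Fellowship (12 recipients in Asia), Beijing Outstanding Graduate, and Tsinghua University Outstanding Doctoral Dissertation. He has served as an area chair for conferences including ACL, NeurIPS, and ICLR. As (co-)first author or corresponding author, he has published 40 papers in top-tier conferences and journals, including CCF-A and CAAI-A venues, with more than 3,000 citations. His research and career have been featured by official media outlets including People's Daily, China Youth Daily, Youth Shanghai (Shanghai Communist Youth League), and Youth Beijing (Beijing Communist Youth League), and the related news has been viewed more than 100 million times online.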

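The lab is currently recruiting postdocs, undergraduate or graduate research assistants, and students for the 2026/2027 intake. If you are interested, please contact me by email.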

Reviewer for the following conferences and journals: NeurIPS, ICML, ICLR, CVPR, ECCV, ICCV, AAAI, IJCAI, AISTATS, IEEE TPAMI, IEEE TCSVT, IEEE TIP, IEEE TMI, IJCV, Pattern Recognition, TACO, Scientific Reports, and others.

Area chair or guest editor for the following conferences and journals: NeurIPS 2025, IJCNN 2025, Big Data and Cognitive Computing, ACL 2025, EMNLP 2025, ICLR 2026.

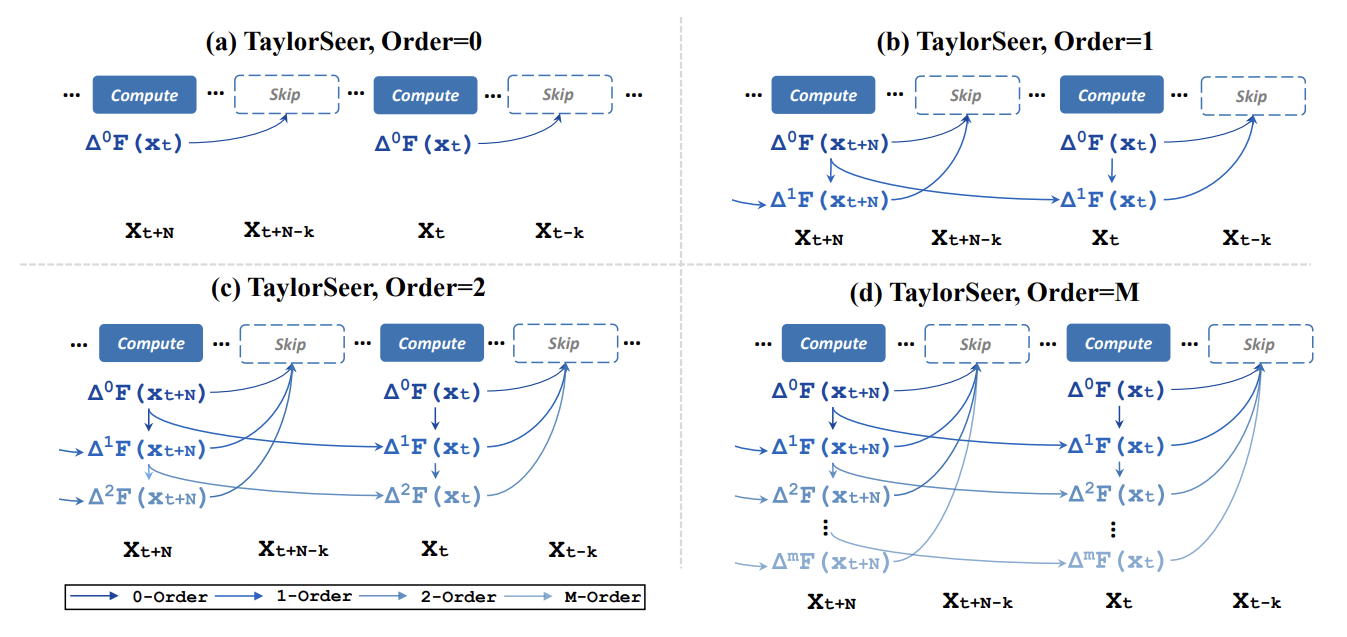

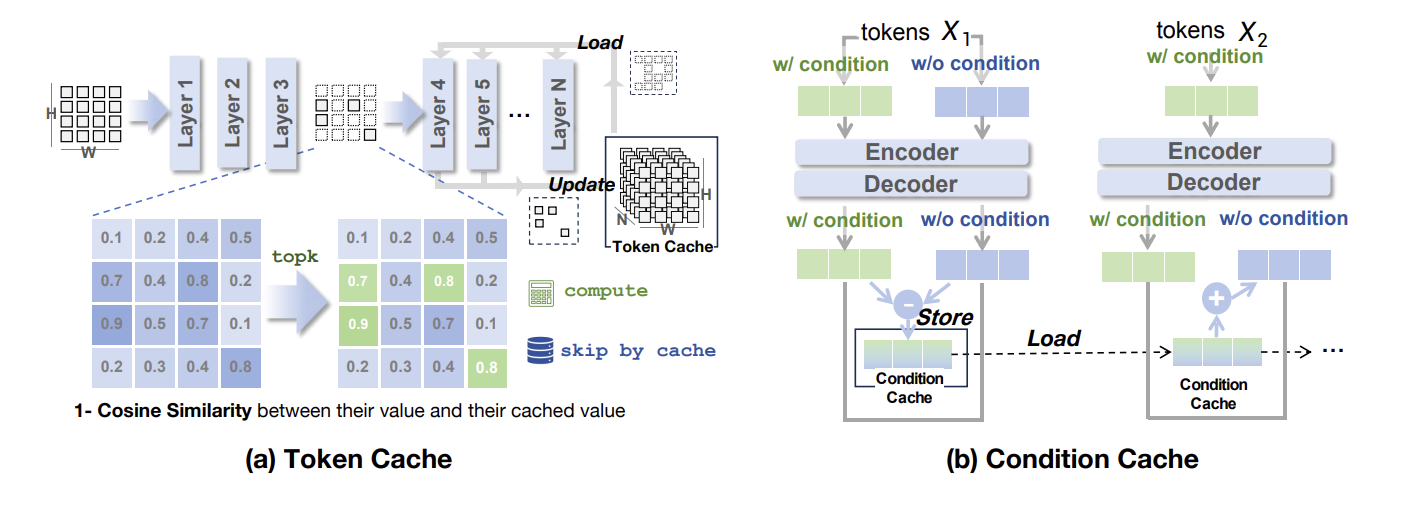

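Today's generative large models have tens of billions of parameters, which leads to extremely high training and inference costs. By developing compression and acceleration methods for generative large models, we can reduce their deployment cost and make them more practical in real-world applications.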

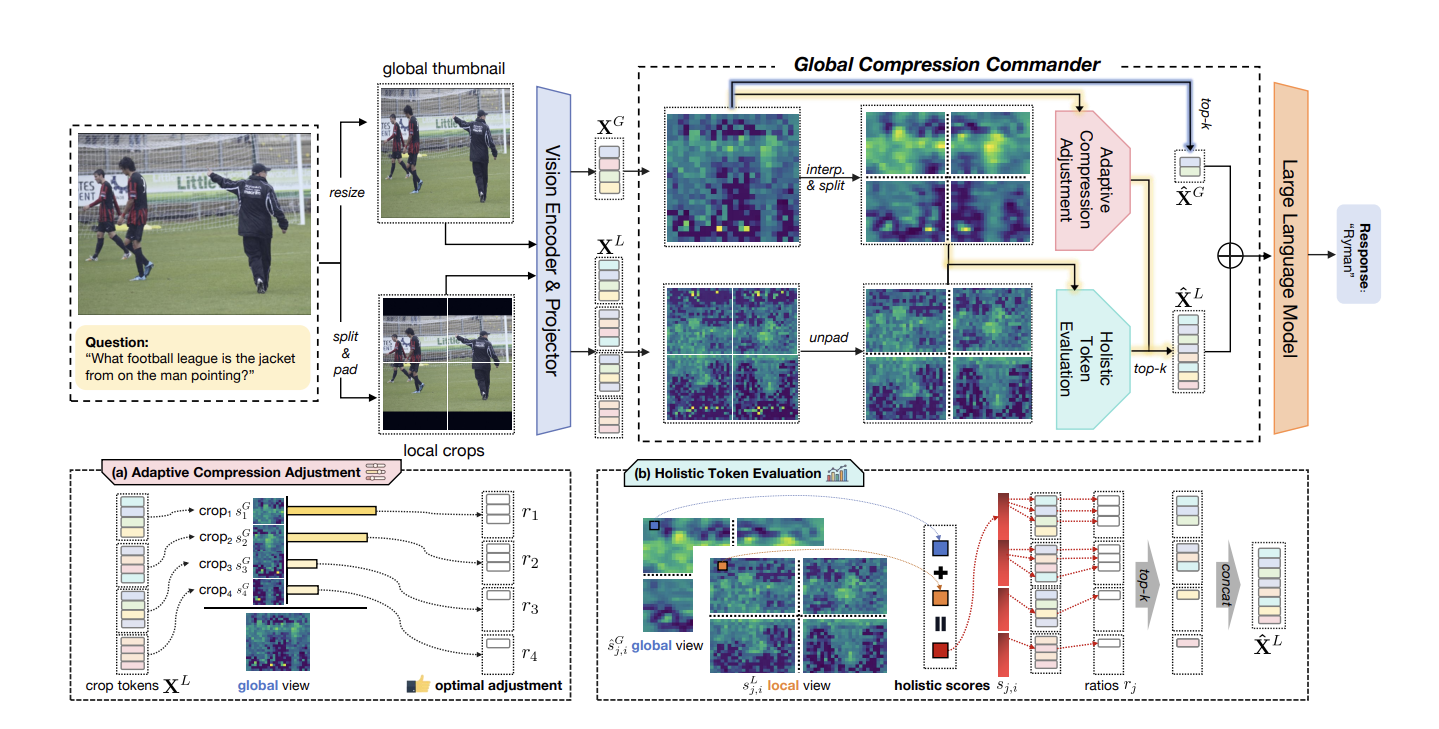

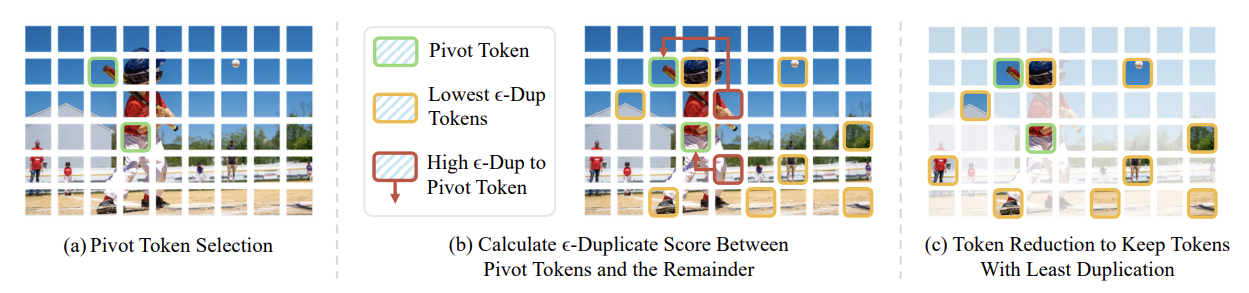

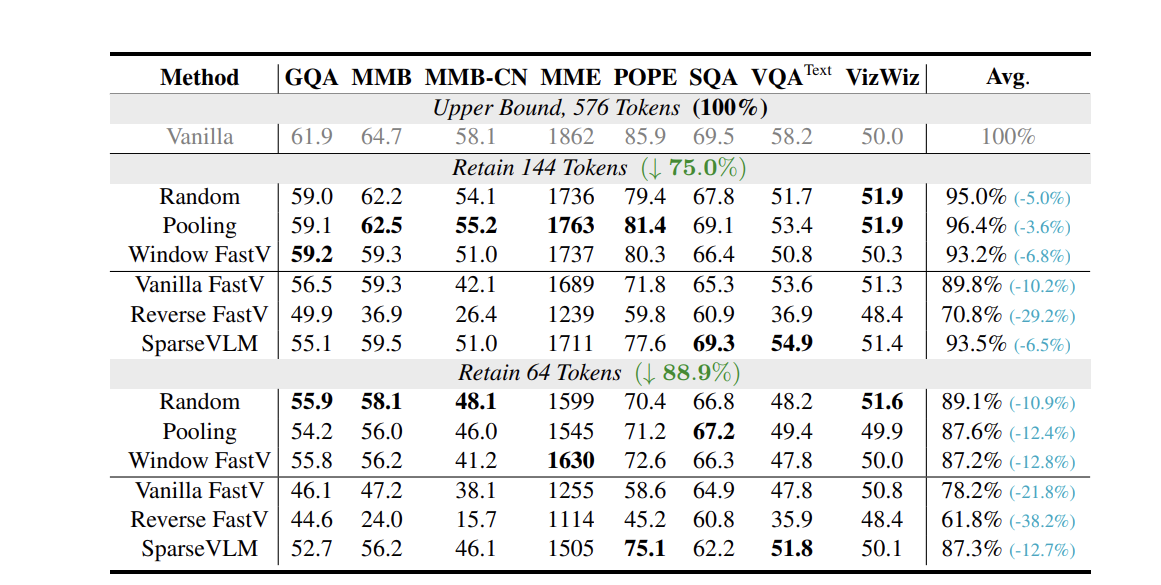

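Multimodal large models integrate text, image, audio, and video data. We study methods for processing and generating multimodal content efficiently, reducing computational cost while maintaining high-quality performance.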

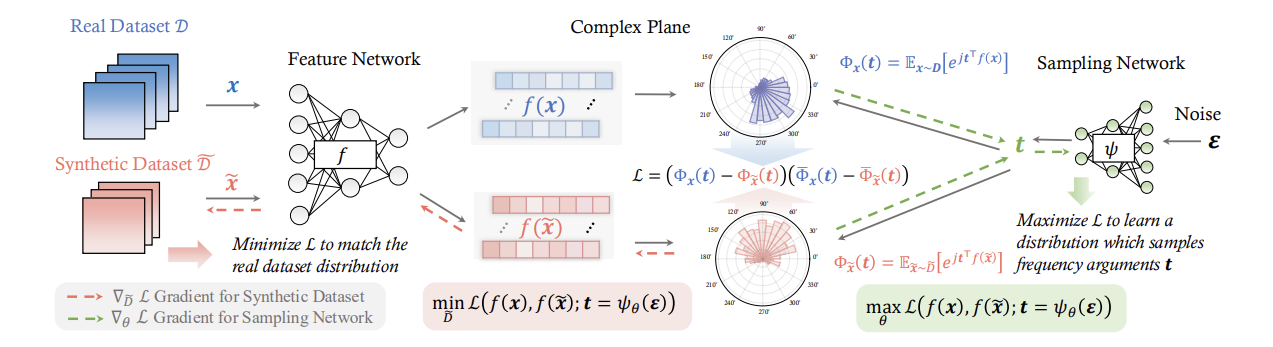

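Current AI models must be trained on enormous amounts of data, which substantially increases the training cost of large models. Using data more efficiently, and cleaning and synthesizing it in a more principled way, is the key to data-efficient AI.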

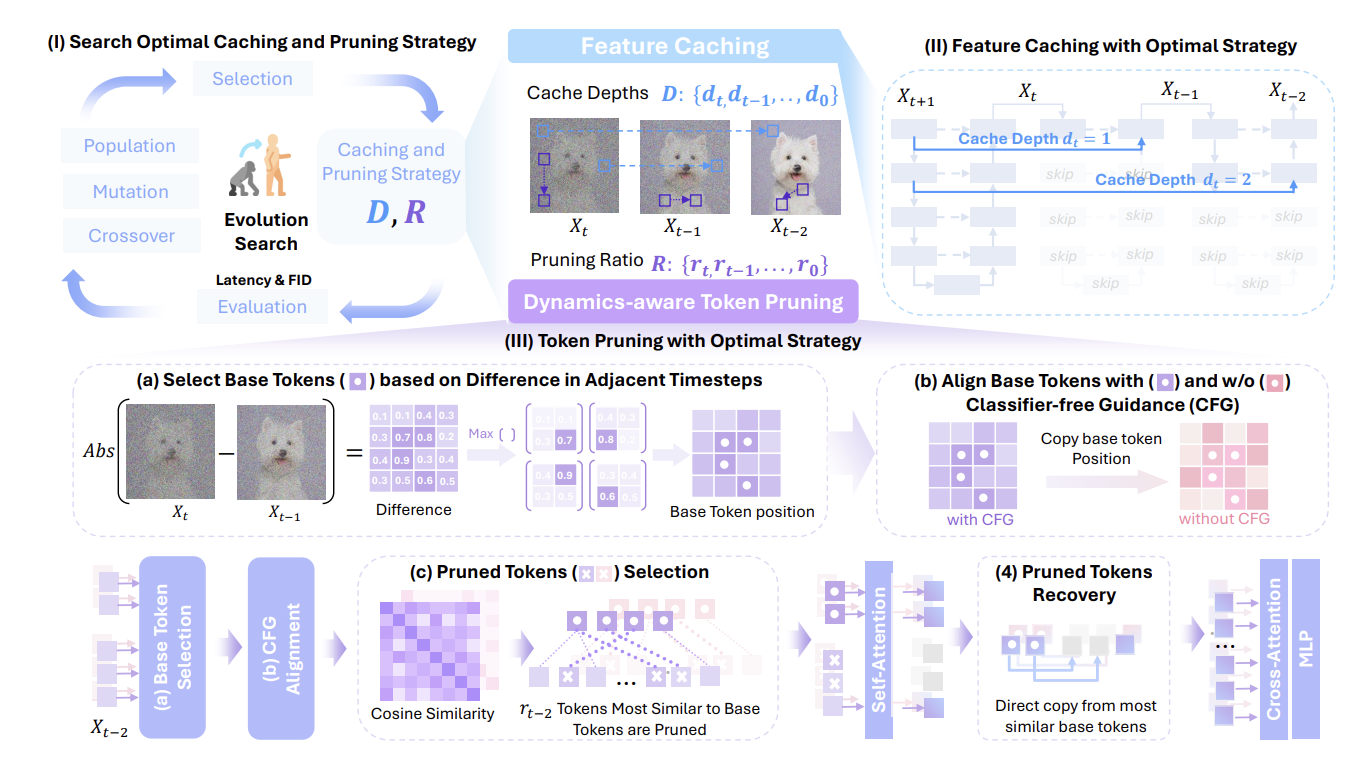

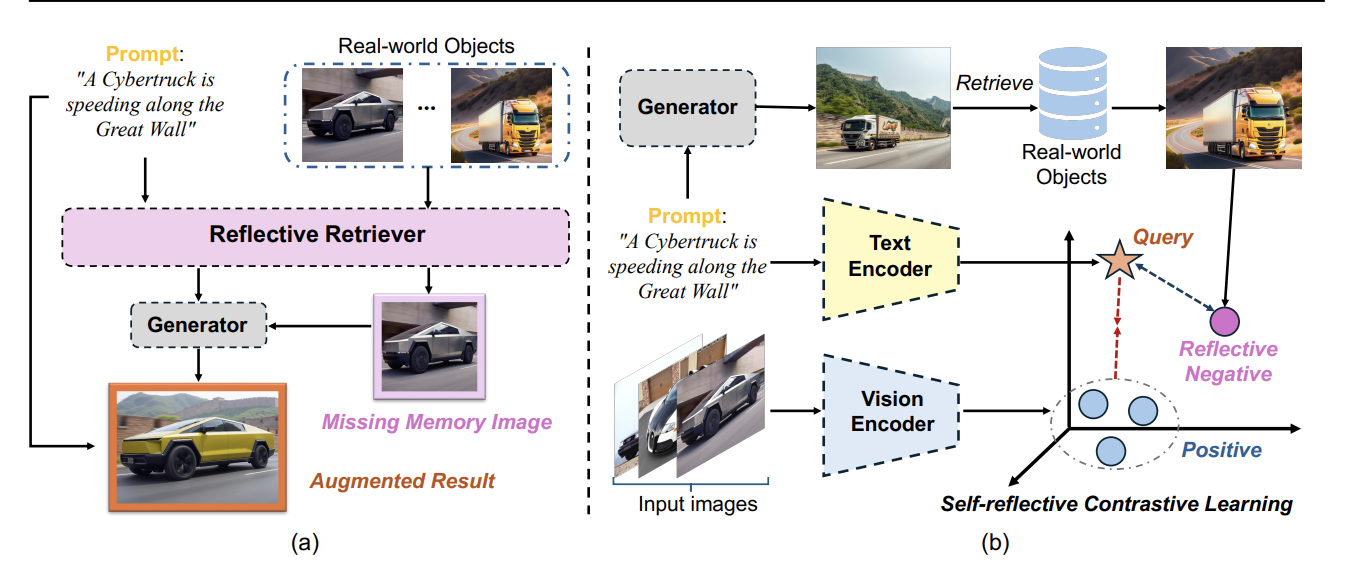

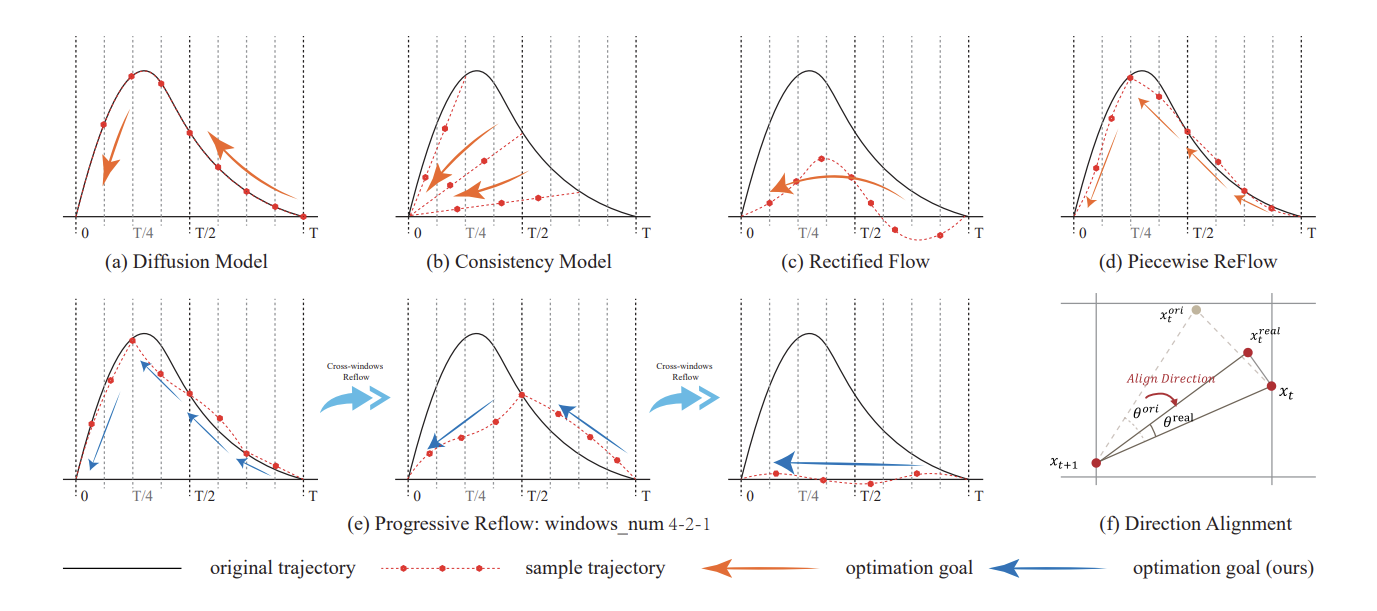

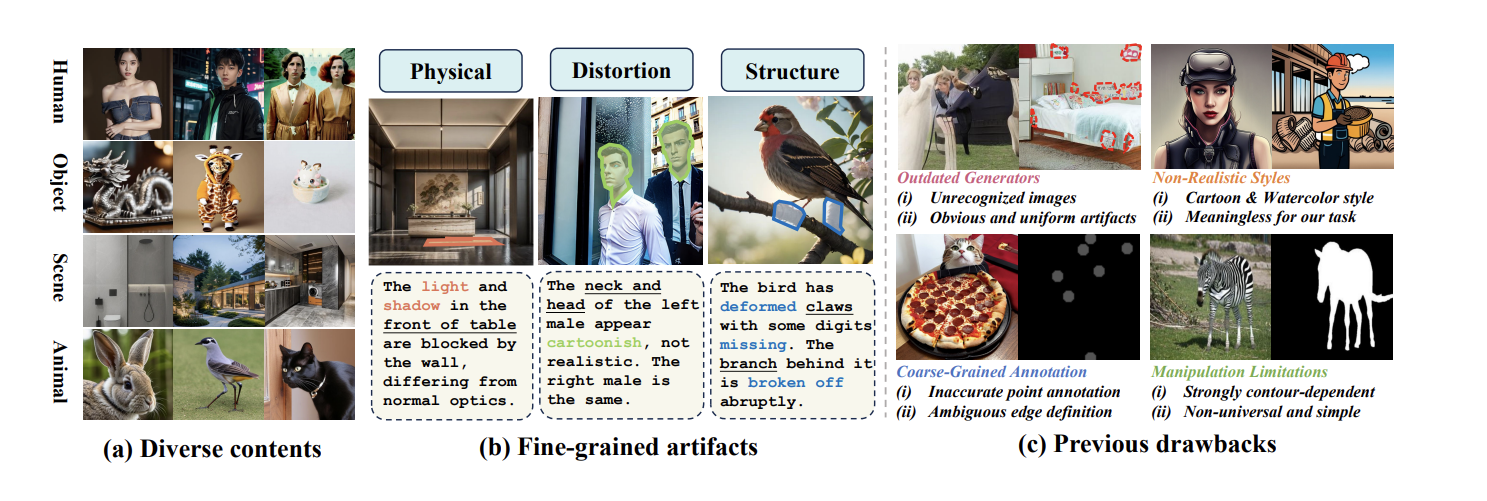

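We study lightweight, efficient AIGC models (such as diffusion models) for multimodal generation tasks including text-to-image and text-to-video, achieving high-quality content generation at lower computational cost and advancing the industrial adoption of AIGC.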

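We study pretraining, post-training, data construction, and benchmark construction for large language models and multimodal large models aimed at scientific research and industrial applications.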

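Linfeng Zhang received his bachelor's degree from Northeastern University and his PhD from Tsinghua University. He currently leads the Efficient and Precision Intelligent Computing (EPIC) Lab at Shanghai Jiao Tong University.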

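Shaobo Wang is a PhD student (class of 2024) in the EPIC Lab at the Institute of Artificial Intelligence, Shanghai Jiao Tong University. He has a solid background in efficient AI, explainable AI, and deep learning theory, and his main research focuses on data synthesis and data reduction.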

Internships: Alibaba Qwen

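Yifeng Gao is a master's student in the EPIC Lab at Shanghai Jiao Tong University. His research interest is developing efficient, reliable, and powerful AI through algorithm and computation co-design. He currently focuses on efficient inference for multi-step reasoning in large language models and on its trustworthiness.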

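Zichen Wen is a PhD student in the EPIC Lab at Shanghai Jiao Tong University, advised by Prof. Linfeng Zhang. He received his bachelor's degree in computer science from the University of Electronic Science and Technology of China (UESTC). As an undergraduate, he published several research papers at well-known AI conferences such as AAAI and ACM MM. His research interests include efficient multimodal large models and trustworthy AI, with a focus on improving the efficiency, reliability, and ethical aspects of AI systems.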

Internships: Kimi, Shanghai AI Lab

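Xuelin Li began his PhD in the EPIC Lab in 2025. He completed his undergraduate studies at the School of Software Engineering, University of Electronic Science and Technology of China (UESTC), where he earned a perfect 4.0/4.0 GPA across all courses. As an undergraduate, he received several awards, including the National Scholarship. His research interest is developing efficient inference paradigms for trustworthy multimodal large language models.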

Internships: Ant Group

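Zexuan Yan joined the EPIC Lab, Prof. Linfeng Zhang's research group at the School of Artificial Intelligence, Shanghai Jiao Tong University, in fall 2025. His research interests include multimodal models, AIGC, and diffusion model acceleration.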

Internships: Xiaohongshu, Alibaba

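Chang Zou is an undergraduate at the Yingcai Honors College of the University of Electronic Science and Technology of China (UESTC) and expects to complete his bachelor's degree in 2026. He comes from Chengdu, Sichuan, but although his hometown is famous for spicy food, he does not eat spicy food. His main research direction is efficient acceleration of AIGC, especially diffusion models, and he has a solid foundation in mathematics and physics. In 2024 he began interning at the EPIC Lab, where, under the supervision of Linfeng Zhang, he contributed to paper submissions to ICLR and CVPR.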

Internships: Tencent Hunyuan (Qingyun Program)

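Xuyang Liu is pursuing a master's degree at the College of Electronics and Information Engineering, Sichuan University. He is also a research intern at Taobao & Tmall Group, focusing on efficient multimodal large language models. In 2024 he joined the EPIC Lab as a research intern and, under the supervision of Prof. Linfeng Zhang, contributed to a comprehensive resource repository on token-level model compression. His research interests center on efficient AI across discriminative, adaptive, reconstruction, and generative tasks.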

Internships: Taobao, Ant Group, OPPO

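Qingyan Wei is a new member of the EPIC Lab, working on efficient language models and AIGC models. Her research interests include developing lightweight, high-performance AI systems for a wide range of applications.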

Internships: Tencent

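Jiacheng Liu is an undergraduate at Shandong University and will begin his PhD at Shanghai Jiao Tong University in 2026, joining the EPIC Lab to work on efficient generative models. His research centers on two complementary areas: efficient diffusion models, which aim to optimize inference and reduce computational cost, and world models, particularly interactive video generation and long-horizon reasoning. He aims to build faster models that can generate controllable, extended visual environments. Guided by the philosophy of "simplicity and depth", he favors elegant, logically coherent solutions and pursues quiet but powerful ideas.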

Internships: Tencent (Qingyun Program)

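Shuang Chen is a master's student entering in 2026. His research focuses on inference acceleration for multimodal large language models, especially on improving efficiency while preserving model performance. He explores techniques such as model compression, token pruning, and optimized inference pipelines to enable scalable, practical deployment of multimodal systems.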

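Yiyu Wang will join the EPIC Lab in 2026 to pursue a PhD under the supervision of Prof. Linfeng Zhang. His current research focuses on multimodal large models, video understanding, and streaming video agents, with the goal of improving the reasoning ability of large models in long-video and real-time video scenarios.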

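Yuanhuiyi Lyu is a PhD student in Artificial Intelligence at the Hong Kong University of Science and Technology (Guangzhou). He previously received his bachelor's degree in Artificial Intelligence from Northeastern University, and his current research covers unified understanding-and-generation models and multimodal generation.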

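Zegang Cheng is a PhD student (class of 2026) at the Hong Kong University of Science and Technology (Guangzhou) and joins the EPIC Lab as a joint-training student. He previously received his master's degree in computer science from New York University, and his current research covers efficient generative models and reinforcement learning for large models.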

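Yicun Yang is a senior majoring in Software Engineering at Harbin Institute of Technology and will begin his PhD in the EPIC Lab in fall 2026. His current research covers scaling up pretraining and accelerating inference for diffusion language models.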

Internships: Ant Group

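Xiangqi Jin is an undergraduate (class of 2022) at the School of Information and Software Engineering, University of Electronic Science and Technology of China (UESTC), and will begin his PhD in the EPIC Lab in fall 2026. He currently works on efficient large language models and is actively exploring reinforcement learning for large models and agents.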