Linfeng Zhang is an assistant professor at the School of Artificial Intelligence, Shanghai Jiao Tong University, and received his Ph.D. from the Institute for Interdisciplinary Information Sciences at Tsinghua University. His honors include the World Artificial Intelligence Conference Cloud Sail Award (Top 20), the Microsoft Fellowship (one of 12 recipients in Asia), Beijing Outstanding Graduate, and the Tsinghua University Outstanding Doctoral Dissertation Award. He also serves as an Area Chair for conferences such as ACL, NeurIPS, and ICLR. He has published 40 high-quality papers in CCF-A and CAAI-A conferences and journals as (co-)first author or corresponding author, with over 3,000 citations. His research achievements and work experience have been featured in official media outlets including People's Daily, China Youth Daily, Youth Shanghai (Shanghai Communist Youth League), and Youth Beijing (Beijing Communist Youth League), with related news receiving over 100 million views online.

Our laboratory is recruiting postdoctoral researchers, undergraduate or graduate research assistants, and students for the class of 2026/2027. If you are interested, please email me.

Reviewer for conferences and journals including NeurIPS, ICML, ICLR, CVPR, ECCV, ICCV, AAAI, IJCAI, AISTATS, IEEE TPAMI, IEEE TCSVT, IEEE TIP, IEEE TMI, PR, TACO, Scientific Reports, and others.

Area Chair and Guest Editor for conferences and journals including NeurIPS 2025, IJCNN 2025, ACL 2025, EMNLP 2025, ICLR 2026, and Big Data and Cognitive Computing.

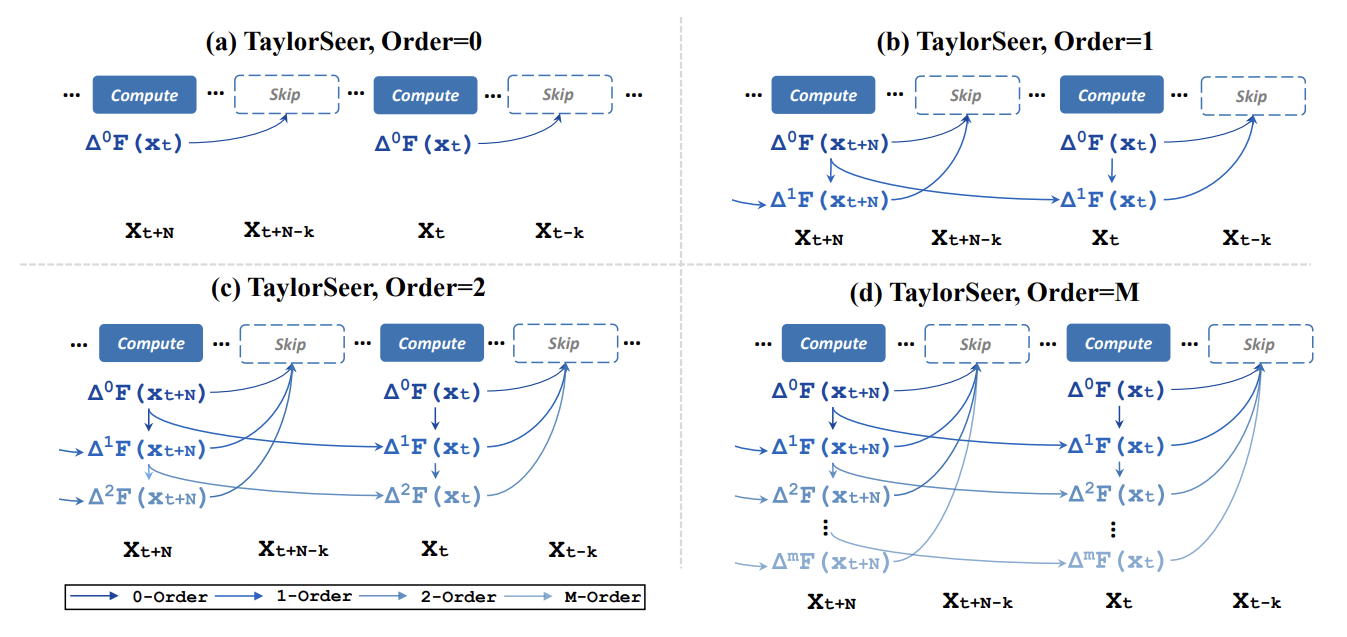

Current generative large models have billions of parameters, which makes training and inference extremely costly. By researching compression and acceleration methods, we aim to reduce deployment costs and make large models more practical for real-world use.

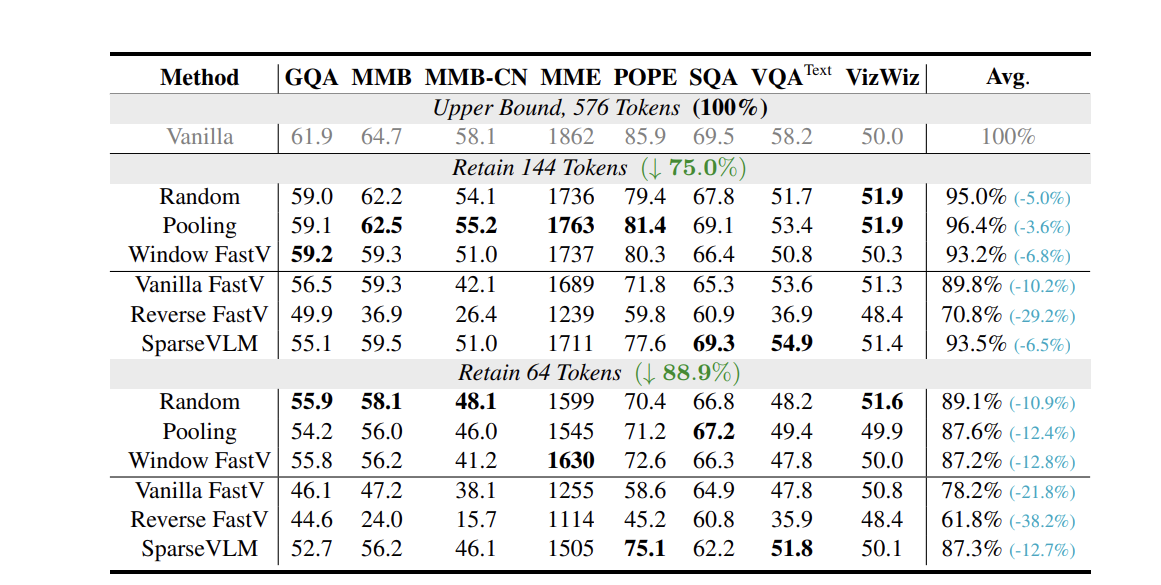

Multimodal large models integrate text, image, audio, and video data. We research efficient methods to process and generate multimodal content, reducing computational costs while maintaining high-quality performance.

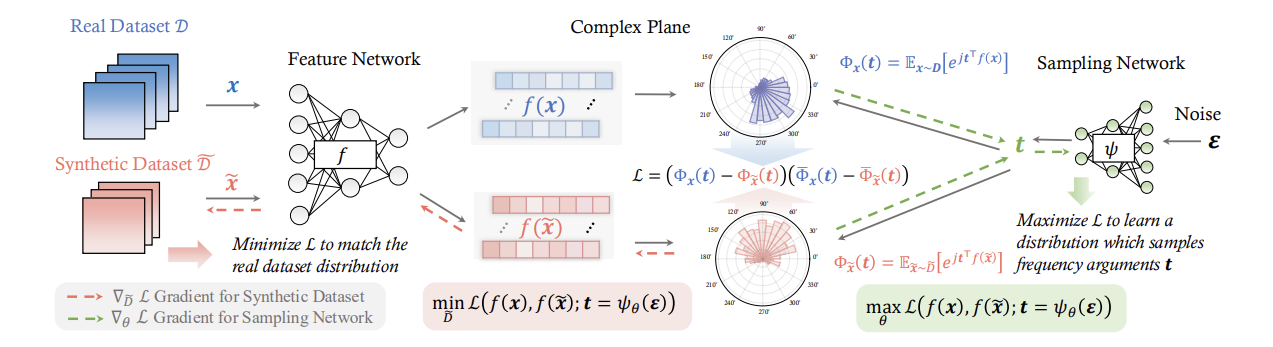

Current AI models require massive amounts of data for training. We study how to utilize data more efficiently through data cleaning, synthesis, and intelligent sampling, reducing training costs while improving model performance.

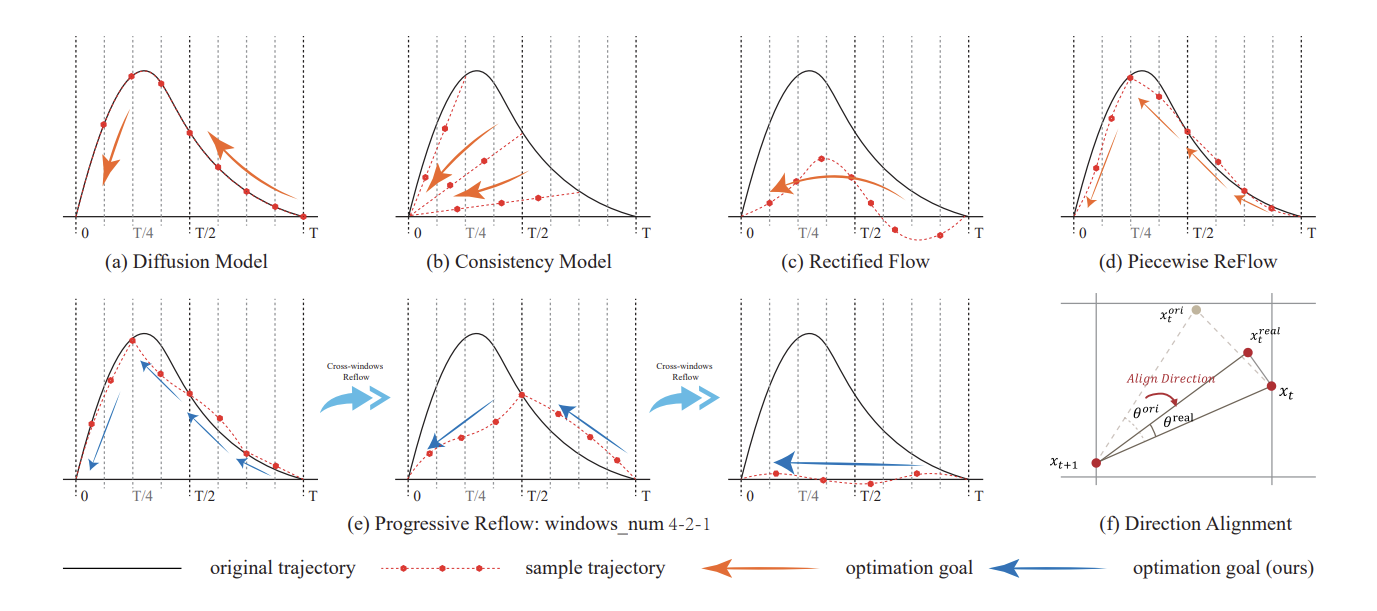

We develop lightweight and efficient AIGC models for text-to-image, text-to-video, and other multimodal generation tasks, enabling high-quality content generation with lower computational costs.

We research pre-training and post-training methods for large models and multimodal large models, focusing on applications in scientific research and industrial settings.

Linfeng Zhang received his Bachelor's degree from Northeastern University and his Ph.D. from Tsinghua University. He currently leads the Efficient and Precision Intelligent Computing (EPIC) Lab at Shanghai Jiao Tong University.

Shaobo Wang is a Ph.D. candidate in the EPIC Lab at SAI, Shanghai Jiao Tong University, starting in 2024. Building on a strong background in efficient AI, explainable AI, and deep learning theory, he focuses his research on data synthesis and data reduction.

Internship Experience: Alibaba Qwen

Yifeng Gao is a master's student in the EPIC Lab, Shanghai Jiao Tong University. His research interests focus on developing capable, reliable, and efficient AI through algorithm and computing co-design.

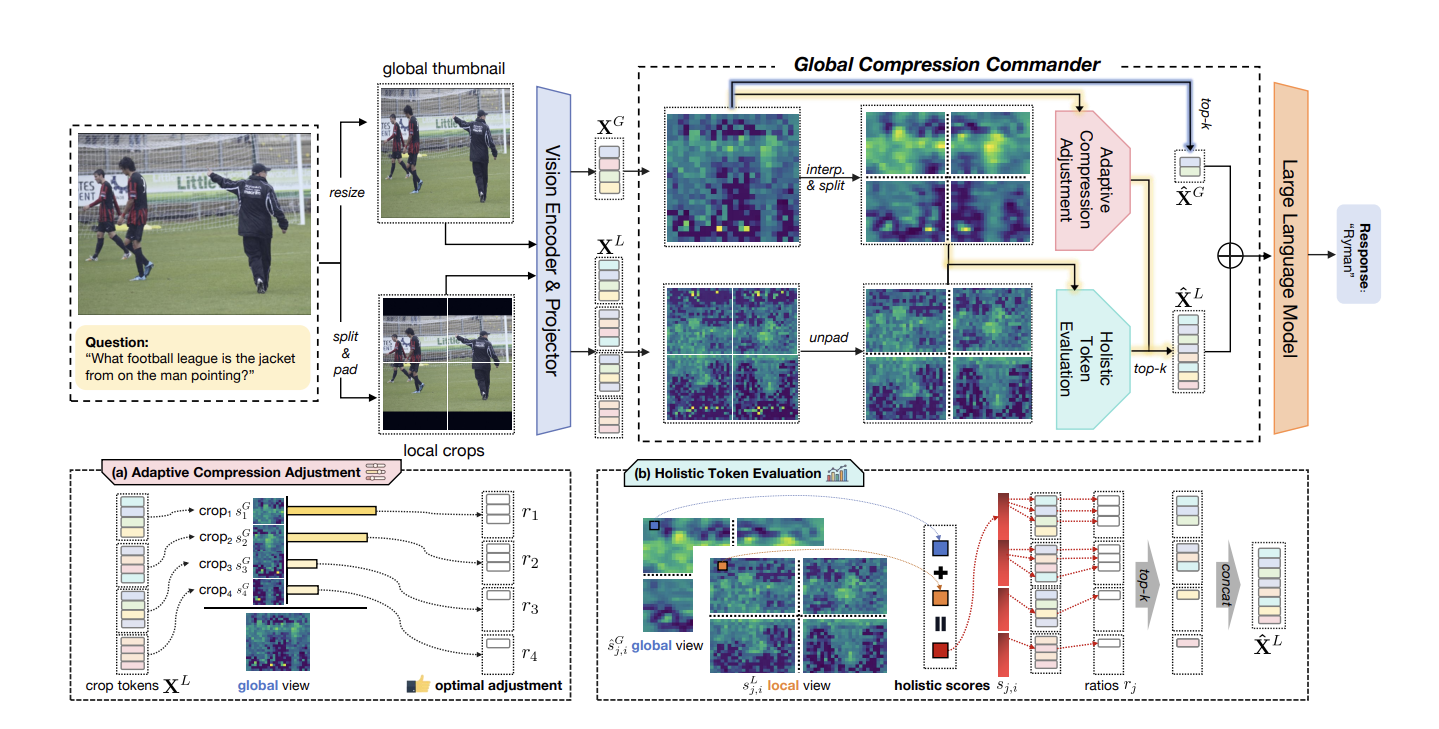

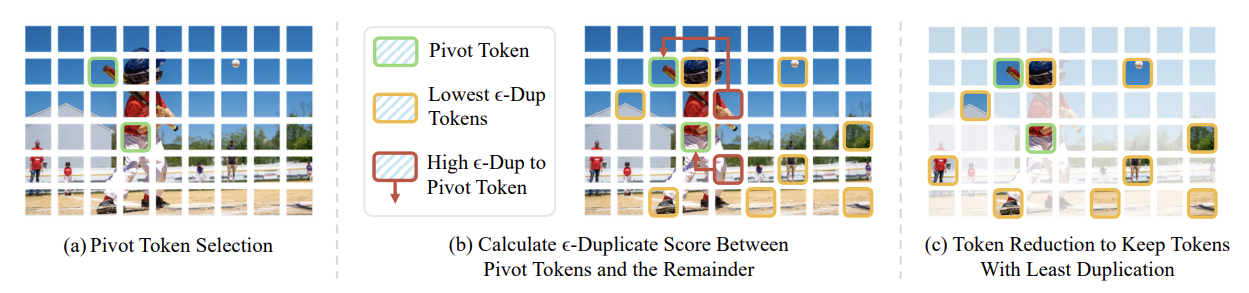

Xuelin Li is pursuing a Ph.D. at the EPIC Lab. He received his Bachelor's degree from the University of Electronic Science and Technology of China (UESTC), where he achieved a perfect GPA of 4.0/4.0 in all courses within the School of Software. During his undergraduate studies, he received numerous awards, including the National Scholarship. His research interests focus on developing efficient inference paradigms for trustworthy multimodal large language models.

Internship Experience: Ant Group

Zichen Wen is a Ph.D. student in the EPIC Lab at Shanghai Jiao Tong University, under the supervision of Prof. Linfeng Zhang. He holds a B.S. degree in Computer Science from the University of Electronic Science and Technology of China. During his undergraduate studies, he published multiple research papers at prestigious AI conferences, including AAAI and ACM MM. His research interests lie in Efficient Multi-Modal Large Models and Trustworthy AI, focusing on advancing the efficiency, reliability, and ethical aspects of artificial intelligence systems.

Internship Experience: Kimi, Shanghai AI Lab

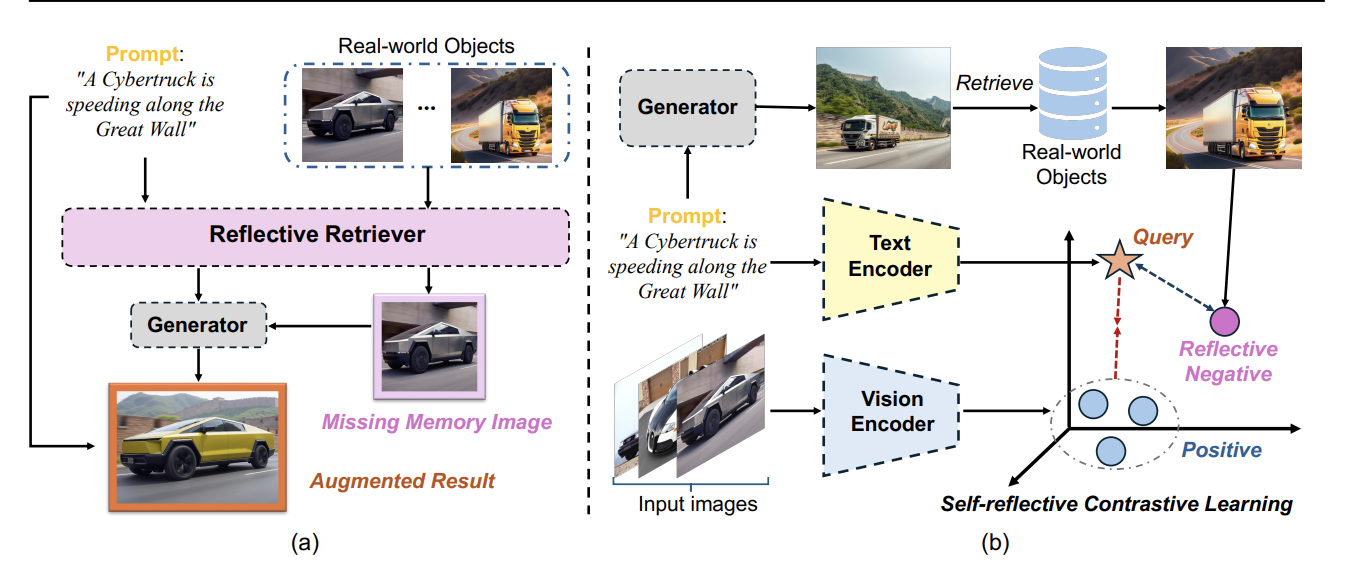

Zexuan Yan is a member of the EPIC Lab, Linfeng Zhang's research group at the School of Artificial Intelligence, Shanghai Jiao Tong University. His research interests include multimodal models, AIGC, and diffusion model acceleration.

Internship Experience: Xiaohongshu, Alibaba

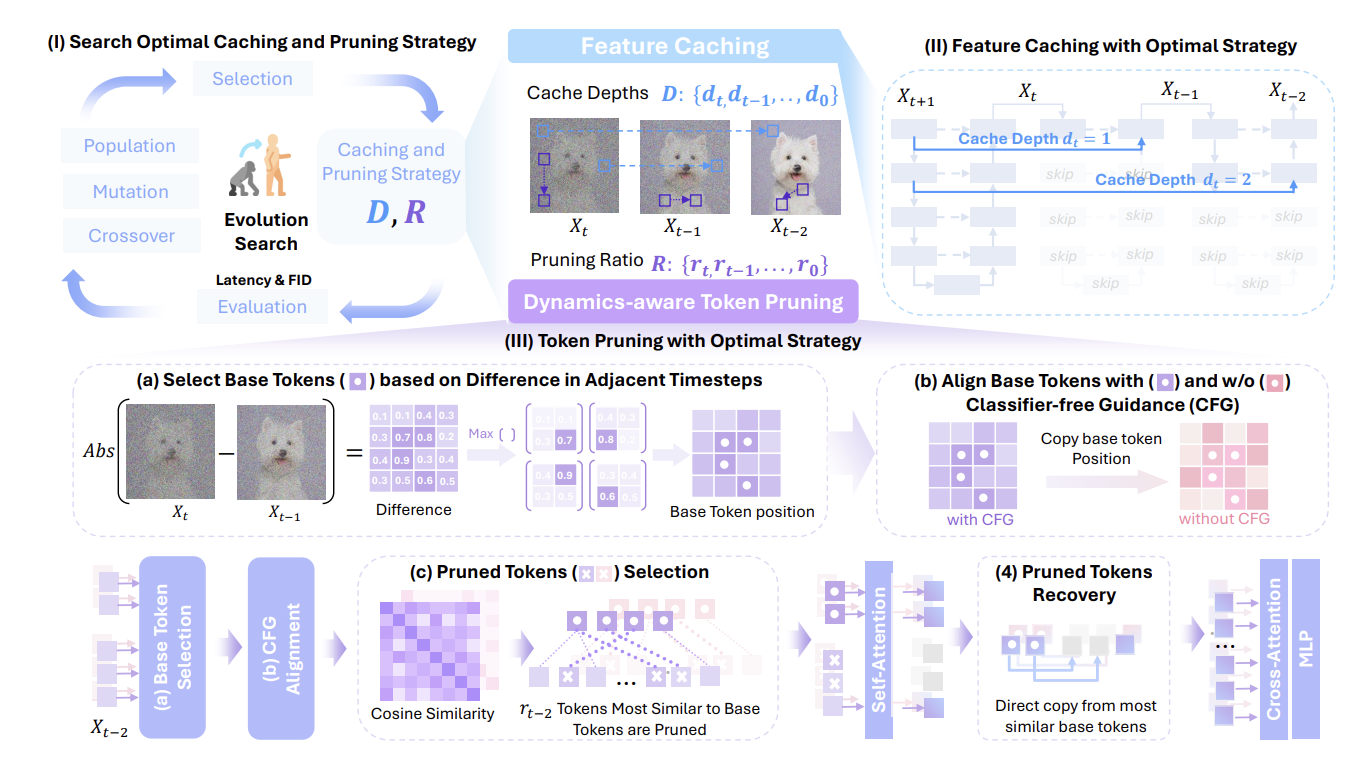

Chang Zou is currently an undergraduate student at Yingcai Honors College, University of Electronic Science and Technology of China (UESTC), expected to complete his bachelor's degree in 2026. Originally from Chengdu, Sichuan, he doesn’t eat spicy food despite his hometown’s reputation. His primary research focus is on the efficient acceleration of AIGC, particularly Diffusion Models, and he has a solid background in mathematics and physics. In 2024, he began his internship at the EPIC Lab, where, under the guidance of his advisor, Linfeng Zhang, he contributed to submissions for ICLR and CVPR.

Internship Experience: Tencent Hunyuan (Qingyun Program)

Xuyang Liu is currently pursuing his M.S. degree at the College of Electronics and Information Engineering, Sichuan University. He is also a research intern at Taobao & Tmall Group, where he focuses on efficient multi-modal large language models. In 2024, he joined the EPIC Lab as a research intern under the guidance of Prof. Linfeng Zhang, contributing to the development of a comprehensive collection of resources on token-level model compression. His research interests include Efficient AI, covering areas such as discrimination, adaptation, reconstruction, and generation.

Internship Experience: Taobao, Ant Group, Oppo

Qingyan Wei is a new member of the EPIC Lab, focusing on efficient deep learning language models and AIGC models. Her research interests include developing lightweight and high-performance AI systems for various applications.

Internship Experience: Tencent

Jiacheng Liu is an undergraduate student at Shandong University and an incoming Ph.D. student at Shanghai Jiao Tong University, starting in 2026. He will join the EPIC Lab, focusing on efficient generative models. His research centers on two synergistic pillars: Efficient Diffusion, which aims to optimize inference and reduce computational costs, and World Models, specifically targeting interactive video generation and long-horizon reasoning. He is dedicated to building models that are not only faster but also capable of producing controllable, extended visual environments. Guided by a philosophy of "simplicity with depth," he favors elegant, logically coherent solutions and is driven by the pursuit of quiet, powerful ideas.

Internship Experience: Tencent (Qingyun Program)

Shuang Chen is a master's student admitted in 2026. His research interests lie in inference acceleration for multimodal large language models, with a particular focus on improving efficiency while maintaining model performance. He is interested in exploring techniques such as model compression, token pruning, and optimized inference pipelines to enable scalable and practical deployment of multimodal systems.

Yiyu Wang will join the EPIC Laboratory in 2026 to pursue a Ph.D. under the supervision of Professor Linfeng Zhang. His current research focuses on multimodal large models, video understanding, and streaming video agents, aiming to enhance the reasoning capabilities of large models in long-form and real-time video scenarios.

Yuanhuiyi Lyu is a Ph.D. student in Artificial Intelligence at The Hong Kong University of Science and Technology (Guangzhou). He received his B.Eng. in Artificial Intelligence from Northeastern University. His current research focuses on unified understanding-generation models and multimodal generative models.

Zegang Cheng is an incoming Ph.D. student at The Hong Kong University of Science and Technology (Guangzhou) in 2026, joining the EPIC Lab as a joint-supervision student. He previously earned an M.S. in Computer Science from New York University. His current research interests include efficient generative models and reinforcement learning.

Yicun Yang is currently a senior undergraduate student majoring in Software Engineering at Harbin Institute of Technology (HIT). He will join the EPIC Lab as a joint-supervision Ph.D. student at HKUST(GZ) in Fall 2026. His research focuses on scaling pre-training for diffusion language models and accelerating their inference.

Internship Experience: Ant Group

Xiangqi Jin is an undergraduate student (Class of 2022) at the School of Information and Software Engineering, University of Electronic Science and Technology of China (UESTC). He will join the EPIC Lab as a joint-supervision Ph.D. student at HKUST(GZ) in Fall 2026. His research focuses on efficient large language models, with active exploration in LLM-based reinforcement learning and LLM agents.

Liang Feng’s long-term research question is: “How can intelligence grow and scale, while remaining precisely calibrated to the complexity of the real world?” He currently studies how to use Agents to synthesize data, improve LLM performance in scientific domains, and build the benchmarks that science needs. He believes synthetic data is essential for LLM self-learning and unbounded growth, while well-designed benchmarks serve both as calibration tools and as ultimate rewards in reinforcement learning frameworks.

Internship Experience: Alibaba, ByteDance
